VLM-Based Robotic Manipulation

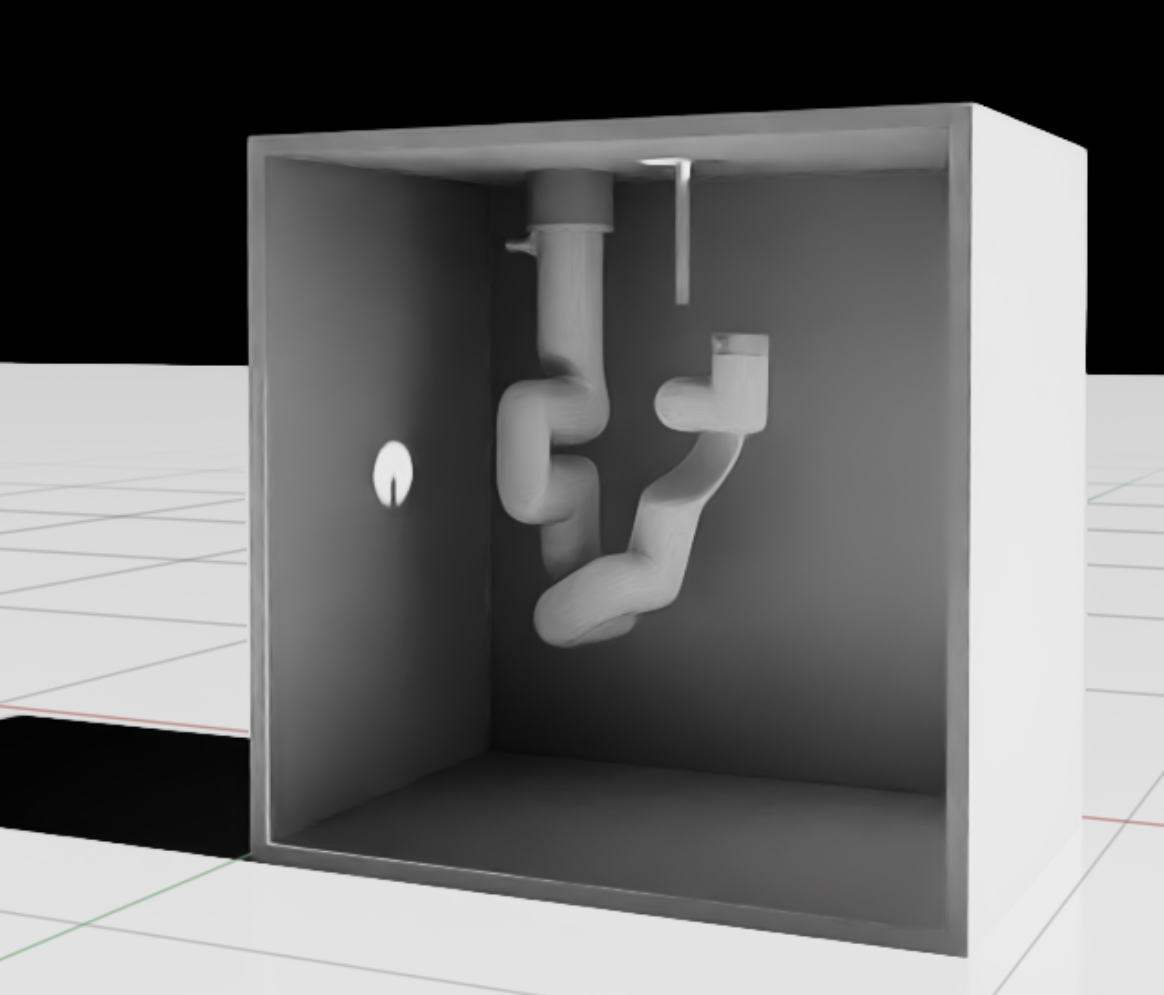

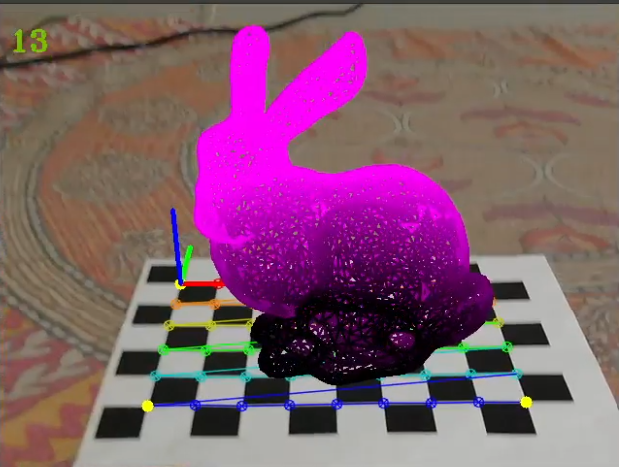

Built an end-to-end pipeline that translates natural language instructions into autonomous robotic manipulation tasks. The system integrates SAM2-based perception, Google Gemini vision-language models (VLMs), and inverse kinematics control in NVIDIA Isaac Sim. Through systematic evaluation of three perception approaches and multiple VLMs, I improved detection accuracy from 30% to 94% while cutting inference time by a factor of three. Implemented self-verification mechanisms that enable automatic error recovery, achieving 100% success on trained tasks and 60% generalization to novel instructions across 45 evaluation episodes.
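The self-verification idea can be illustrated with a minimal plan, execute, verify loop. This is only a sketch under stated assumptions, not the project's actual code: `run_with_verification`, `EpisodeResult`, and the three callables (`plan`, `execute`, `verify`) are hypothetical names standing in for the VLM planner, the inverse kinematics controller, and a VLM-based check of the resulting scene.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class EpisodeResult:
    success: bool
    attempts: int


def run_with_verification(
    instruction: str,
    plan: Callable[[str, Optional[str]], str],
    execute: Callable[[str], None],
    verify: Callable[[str], Optional[str]],
    max_attempts: int = 3,
) -> EpisodeResult:
    """Retry a task until the verifier reports no problem (hypothetical sketch).

    plan(instruction, feedback) returns an action description; feedback is
    None on the first attempt and the verifier's last complaint afterwards.
    execute(action) carries the action out (e.g. via an IK controller).
    verify(instruction) returns None on success, or a failure description.
    """
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        action = plan(instruction, feedback)
        execute(action)
        feedback = verify(instruction)
        if feedback is None:
            return EpisodeResult(success=True, attempts=attempt)
    # Every attempt failed verification.
    return EpisodeResult(success=False, attempts=max_attempts)
```

Passing the verifier's failure description back into the planner is one plausible way to get error recovery; the real system may feed back images, poses, or other state instead.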