IoT-Based Autonomous Patrol Bot

Team: Adnan Amir, Aaryaman Chandgothia, Moksh Goel

Supervised by: Dr. Venkatesh Deshmukh, Prof. Nirmal Thakur

Addressing a Real-World Security Gap

Traditional security measures such as CCTV cameras often fall short because of human factors like guard fatigue, especially during night shifts. This project, the "IoT-Based Patrol Bot," was conceived to tackle that problem, particularly for small and medium-sized businesses where advanced security solutions can be cost-prohibitive. The core motivation was to develop an affordable, autonomous, and reliable auxiliary security system that complements existing setups.

System Overview & Core Functionality

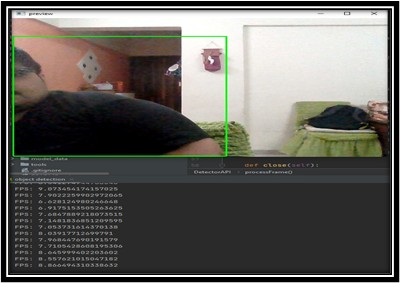

The Patrol Bot is a compact, 3D-printed rover designed for stealth and autonomous operation. Its primary function is to patrol a designated indoor area after hours. When its onboard camera and OpenCV-based object detection pick up a human presence, the bot triggers a multi-channel alert system via the ThingSpeak IoT platform. Relevant personnel are then notified through several channels at once: email, Twitter, MQTT desktop notifications, and VoIP calls orchestrated through IFTTT.

🎥 Project Showcase Video

Watch the Patrol Bot in action and see its core functionalities demonstrated in the video below. This showcase highlights its autonomous navigation, object detection capabilities, and the integrated alert system.

Key Technical Highlights & Design Philosophy

The project followed a mechatronic design approach, with concurrent development and integration of mechanical, electronic, and software systems.

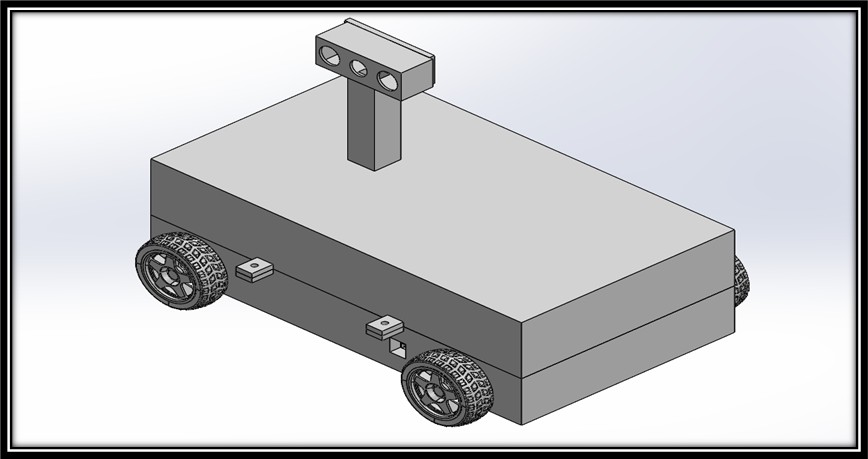

Mechanical Design

- The chassis is 3D-printed with black filament for stealth and ease of replacement.

- It houses all components internally, including microprocessors, motor driver, battery, and sensors, with accessible ports for charging and reprogramming.

- A 2WD system with two driven rear wheels and supporting front wheels was chosen for cost-effectiveness and navigational efficiency.

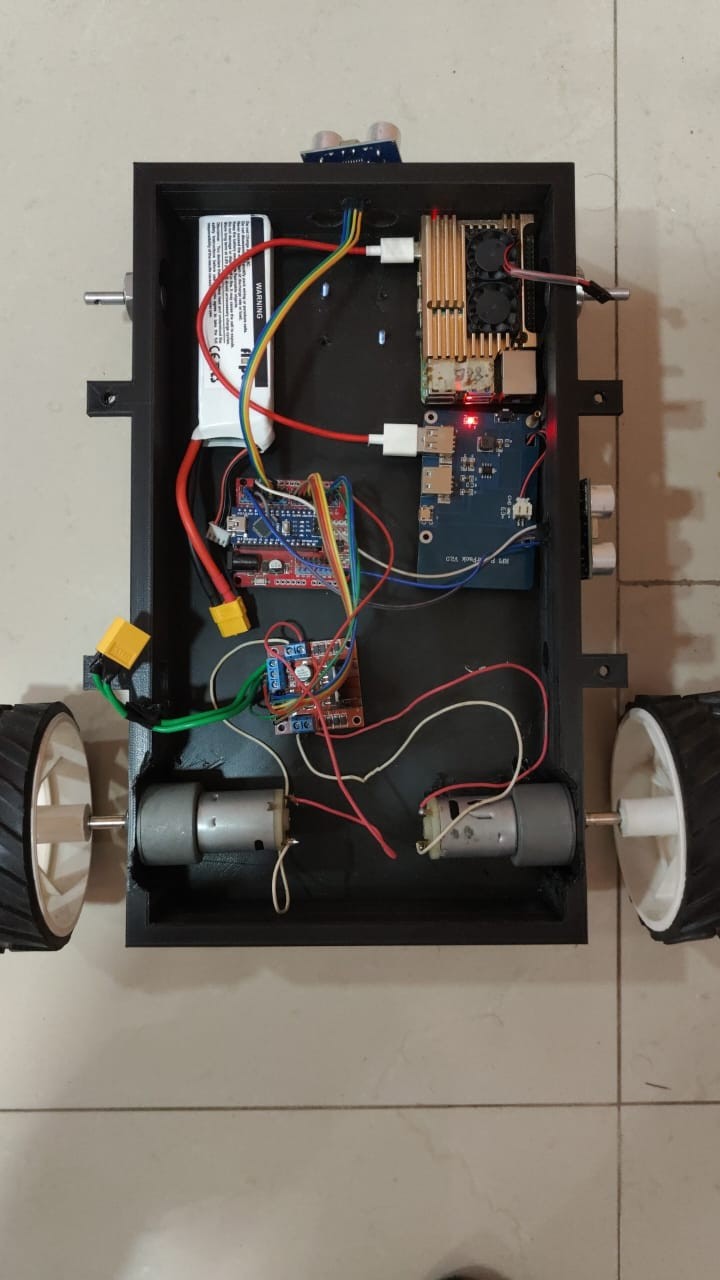

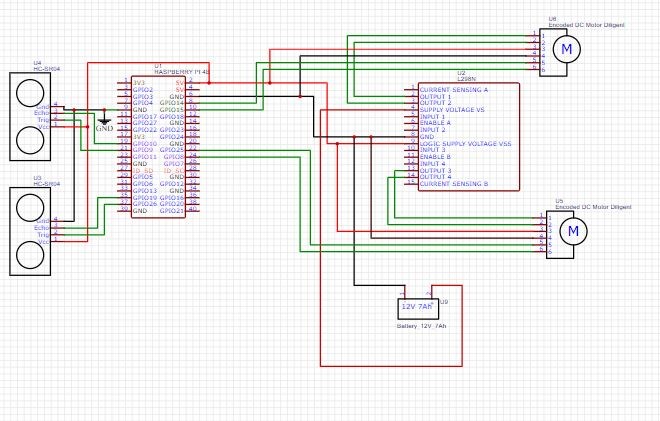

Electronic System

- Processing: A Raspberry Pi 4 Model B handles the computationally intensive object detection, while an Arduino (Nano or Uno) runs the navigation algorithm. Splitting the work across two boards keeps navigation responsive while the Pi is busy with inference, and lets the bot keep moving if the vision side stalls.

- Motor Control: An L298N dual motor driver controls the DC motors.

- Sensing (Navigation): Two HC-SR04 ultrasonic sensors are used: one front-facing for obstacle detection and turning decisions, and one side-facing for a (previously explored) PID-based wall-following algorithm.

- Power: A rechargeable 11.1V 2200mAh LiPo battery powers the system.
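The front HC-SR04 reports range as the width of an echo pulse, which has to be converted to a distance before any turning decision can be made. The following is a minimal sketch of that conversion, written in Python for readability (on the bot this logic would run on the Arduino). The function names and the 25 cm threshold are illustrative assumptions, not values taken from the project.

```python
# Speed of sound is roughly 343 m/s at about 20 °C, i.e. 0.0343 cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_to_distance_cm(echo_pulse_us: float) -> float:
    """Convert an HC-SR04 echo pulse width (microseconds) to a distance in cm.

    The pulse covers the round trip to the obstacle and back,
    so the one-way distance is half of the travelled path.
    """
    if echo_pulse_us < 0:
        raise ValueError("pulse width cannot be negative")
    return echo_pulse_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def is_obstacle(echo_pulse_us: float, threshold_cm: float = 25.0) -> bool:
    """Return True when the measured distance is within the (assumed) threshold."""
    return echo_to_distance_cm(echo_pulse_us) <= threshold_cm
```

For example, a 1000 µs echo corresponds to about 17 cm, which would count as an obstacle under the assumed threshold.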

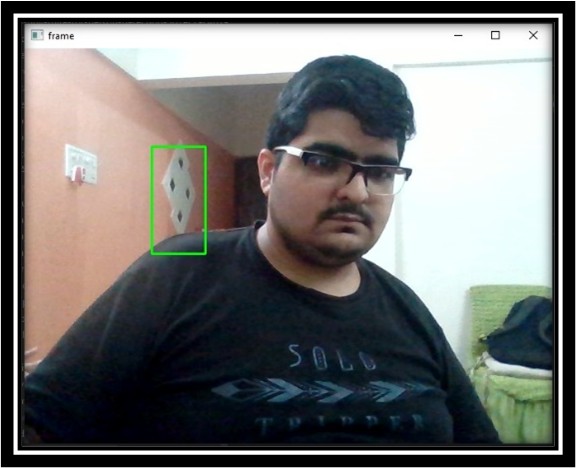

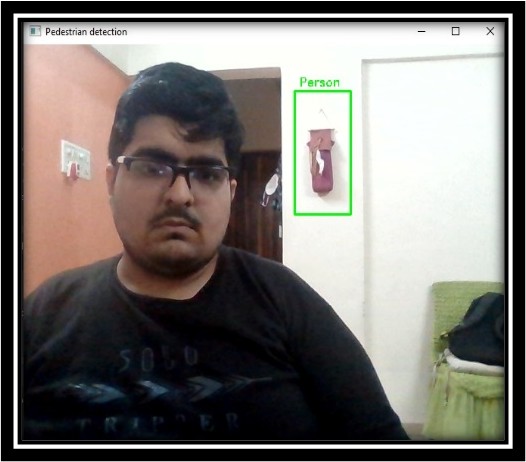

Detection System

- Algorithm: After evaluating several methods (HOG, Cascade Classifier, R-CNN, SSD), the Single Shot MultiBox Detector (SSD) with an Inception v2 network was selected because it offered a good balance of speed and accuracy on the Raspberry Pi. The model was trained primarily on the COCO dataset.

- Camera: A Pi NoIR camera (a Pi camera without the infrared filter) on an adjustable mount was used for night vision capabilities.
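Once the SSD network has run on a frame, the bot only cares about one question: is there a person in view? The sketch below shows one way to answer it, assuming the detections arrive in the row layout that OpenCV's `dnn` module produces for SSD models (`[image_id, class_id, confidence, left, top, right, bottom]` with normalized coordinates) and that "person" has class ID 1, as in the COCO label map shipped with TensorFlow SSD models. The function name and the 0.5 confidence threshold are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

PERSON_CLASS_ID = 1  # assumed: "person" in the COCO label map for TensorFlow SSD models

Box = Tuple[int, int, int, int]


def find_people(
    detections: Sequence[Sequence[float]],
    frame_w: int,
    frame_h: int,
    min_confidence: float = 0.5,
) -> List[Tuple[float, Box]]:
    """Keep only confident 'person' detections and scale boxes to pixels.

    Each row is [image_id, class_id, confidence, left, top, right, bottom],
    with box coordinates normalized to the range 0..1.
    """
    people = []
    for _, class_id, confidence, left, top, right, bottom in detections:
        if int(class_id) != PERSON_CLASS_ID or confidence < min_confidence:
            continue
        box = (
            round(left * frame_w),
            round(top * frame_h),
            round(right * frame_w),
            round(bottom * frame_h),
        )
        people.append((float(confidence), box))
    return people
```

With OpenCV, the rows would typically come from `net.forward()[0, 0]` after loading the model with `cv2.dnn.readNetFromTensorflow`. A non-empty result from `find_people` is what would trigger an alert.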

Comparison of different detection methods explored:

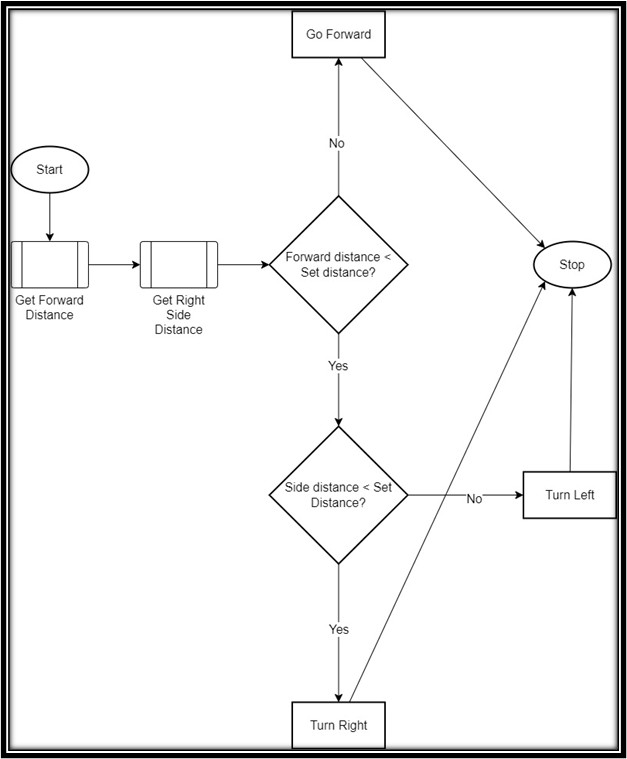

Navigation System

- Initial explorations included PID-based wall following.

- The final navigation strategy is a Random Exploration Algorithm. The bot drives straight until the front ultrasonic sensor detects an obstacle, then attempts to turn right. If the right side is also blocked (either detected by a right-side sensor or inferred), it turns left instead. This simple rule set aims to maximize room coverage with minimal sensor complexity, so that the camera sees the room from many different angles.

- The navigation system is deliberately isolated from the vision system to ensure mobility even if the vision processing encounters issues.
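The decision rule described above is small enough to express as a single function. The sketch below is written in Python for readability, although on the bot this logic runs on the Arduino. It assumes both a front and a right-side distance reading are available, and the `Action` names and 25 cm clearance are hypothetical.

```python
from enum import Enum


class Action(Enum):
    FORWARD = "forward"
    TURN_RIGHT = "turn_right"
    TURN_LEFT = "turn_left"


def choose_action(front_cm: float, right_cm: float, clearance_cm: float = 25.0) -> Action:
    """Pick the next move from the two distance readings.

    Drive straight while the path ahead is clear; otherwise prefer
    a right turn and fall back to a left turn when the right is blocked.
    """
    if front_cm > clearance_cm:
        return Action.FORWARD
    if right_cm > clearance_cm:
        return Action.TURN_RIGHT
    return Action.TURN_LEFT
```

Because the function depends only on the two distance readings, it can run without any input from the vision system, which matches the isolation described above.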

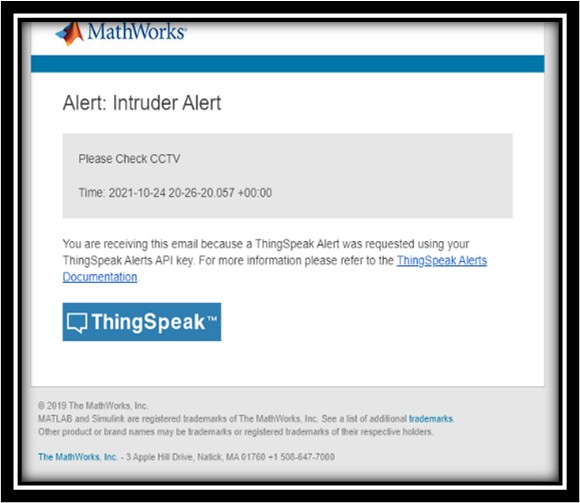

IoT System & Alerting

- Platform: ThingSpeak is the central IoT platform used for data logging and triggering alerts.

- Alert Mechanisms:

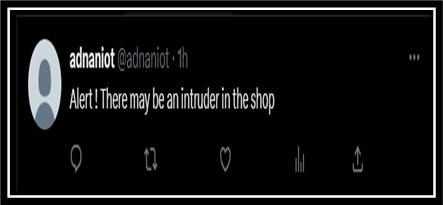

- ThingSpeak React & ThingTweet: Sends Twitter alerts.

- ThingSpeak React & MATLAB Analysis: Sends email alerts.

- ThingSpeak MQTT & MQTTX: Provides instantaneous desktop notifications.

- IFTTT Integration: Leverages ThingSpeak webhooks to trigger IFTTT applets for:

- Forwarding Twitter alerts to Telegram.

- Initiating VOIP calls to a designated device.

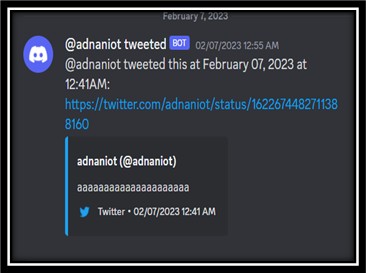

- Sending alerts to Discord.

- This multi-layered alert system provides redundancy, increasing the likelihood of an alert being noticed.
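All of the alert channels above hang off a single event: a new value written to a ThingSpeak channel. A minimal sketch of that write from the Raspberry Pi side follows, using ThingSpeak's HTTP update endpoint. The field assignments (`field1` as a detection flag, `field2` as confidence) and the helper names are assumptions for illustration, and the 15-second throttle reflects the update-rate limit on free ThingSpeak accounts.

```python
import time
from typing import Optional
from urllib.parse import urlencode
from urllib.request import urlopen

THINGSPEAK_UPDATE_URL = "https://api.thingspeak.com/update"


def build_update_url(write_api_key: str, person_detected: bool, confidence: float) -> str:
    """Build the channel update URL (field layout is an assumption)."""
    params = {
        "api_key": write_api_key,
        "field1": int(person_detected),   # 1 = person seen, 0 = clear
        "field2": f"{confidence:.2f}",    # detector confidence
    }
    return f"{THINGSPEAK_UPDATE_URL}?{urlencode(params)}"


class AlertThrottle:
    """Allow at most one update per min_interval_s seconds."""

    def __init__(self, min_interval_s: float = 15.0) -> None:
        self.min_interval_s = min_interval_s
        self._last_sent: Optional[float] = None

    def ready(self, now: float) -> bool:
        if self._last_sent is not None and now - self._last_sent < self.min_interval_s:
            return False
        self._last_sent = now
        return True


def send_alert(write_api_key: str, confidence: float, throttle: AlertThrottle) -> bool:
    """Post a detection to ThingSpeak; returns False if throttled or rejected."""
    if not throttle.ready(time.monotonic()):
        return False
    url = build_update_url(write_api_key, True, confidence)
    with urlopen(url, timeout=10) as response:
        # ThingSpeak answers with the new entry ID, or 0 when the update failed.
        return response.read().strip() != b"0"
```

A ThingSpeak React rule watching `field1` could then fan the event out to the individual channels, so the bot itself only has to make one HTTP request per detection.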


Challenges & Learning

The project involved overcoming challenges such as optimizing object detection performance on a Raspberry Pi and refining the navigation strategy for effective coverage. The initial PID wall-following approach proved computationally intensive for the hardware, leading to the adoption of the simpler random exploration method. Integrating multiple IoT services and ensuring reliable alert delivery across different platforms was also a key learning experience.
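For context, the PID wall-following idea that was explored first can be sketched as follows: the side-facing ultrasonic reading is compared with a target distance, and the resulting correction is applied as a speed difference between the two driven wheels. This is a generic PID sketch, not the project's actual code. The gains, base speed, and the assumption that the wall is on the bot's right are all illustrative.

```python
from typing import Optional, Tuple


class PID:
    """Textbook PID controller with a fixed time step supplied by the caller."""

    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self._integral = 0.0
        self._prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self._integral += error * dt
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        return self.kp * error + self.ki * self._integral + self.kd * derivative


def wheel_speeds(
    side_distance_cm: float,
    target_cm: float,
    pid: PID,
    dt: float,
    base_speed: int = 150,
    max_pwm: int = 255,
) -> Tuple[int, int]:
    """Return (left, right) PWM values that steer toward the target wall distance.

    Assumes the wall is on the right: when the bot drifts away from it
    (positive error), the left wheel speeds up to steer back to the right.
    """
    correction = pid.update(side_distance_cm - target_cm, dt)
    left = min(max_pwm, max(0, round(base_speed + correction)))
    right = min(max_pwm, max(0, round(base_speed - correction)))
    return left, right
```

Compared with the three-branch exploration rule, this loop needs a steady stream of side readings and tuned gains, which illustrates why the simpler strategy was attractive on constrained hardware.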

Conclusion & Future Scope

The IoT-Based Patrol Bot demonstrates a cost-effective, autonomous way to strengthen after-hours security. It integrates object detection, mobile robotics, and a redundant, multi-channel IoT alert system in a single platform.

Future development could explore:

- Upgrading navigation with LiDAR for SLAM capabilities.

- Developing a unified custom application for streamlined alerts.

- Investigating alternative IoT platforms like Blynk.

- Incorporating mecanum wheels for improved maneuverability in tight spaces.

This project serves as a strong example of applying mechatronics engineering principles to solve a tangible real-world problem, emphasizing system integration, IoT connectivity, and autonomous decision-making.